Bring GenAI In-House — Without the Complexity

A smarter way for SMBs to take control, cut costs, and scale AI on your terms

You don’t need a massive cloud budget or a data center team to leverage generative AI. BCM and its broad network of US-based system integration partners make it possible for small and mid-sized businesses to deploy powerful on-premises GenAI servers that are built in the USA, with security, performance, and flexibility built in.

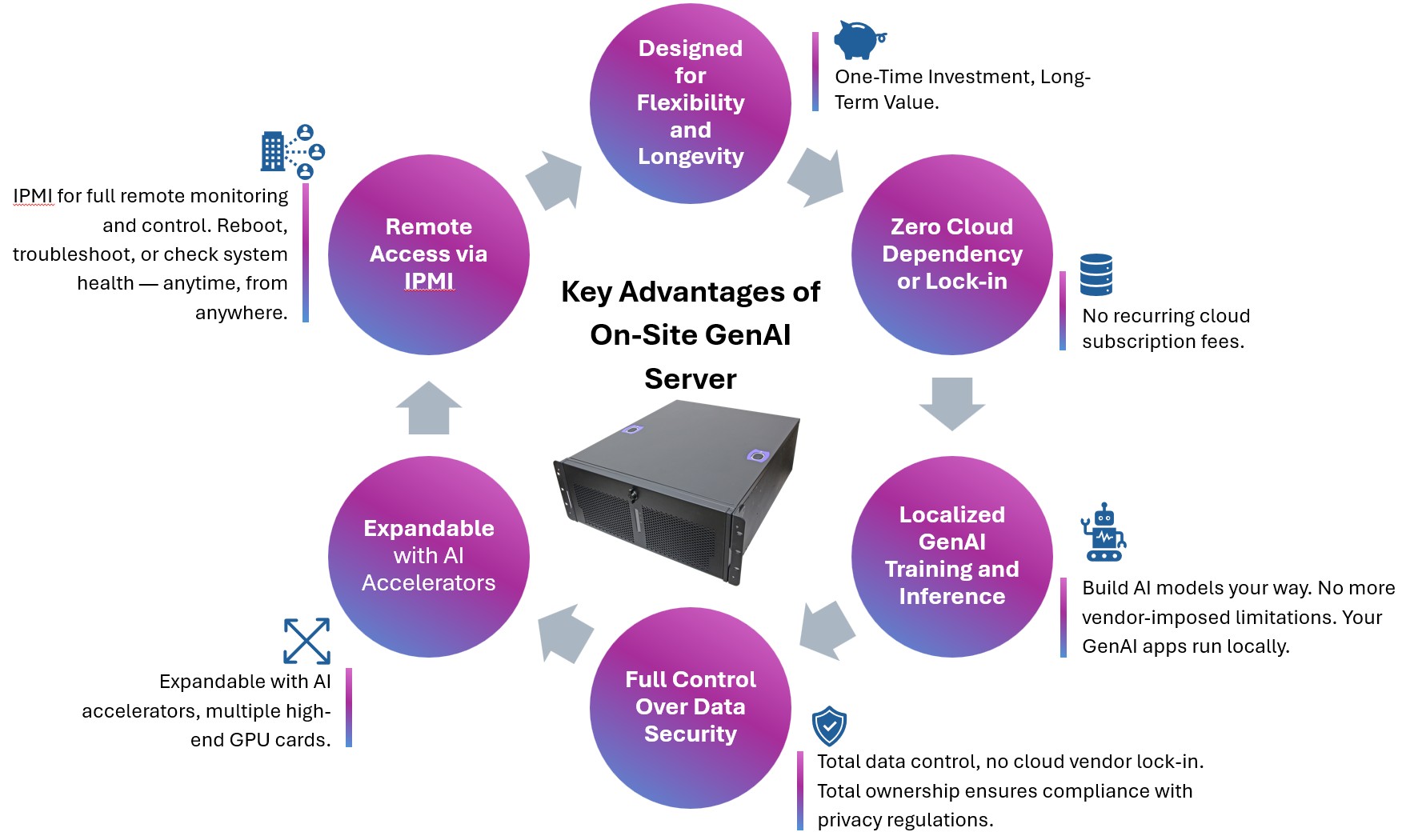

BCM On-Site GenAI Server - Custom-Built for Small and Medium Businesses

BCM and our team of system integration partners deliver a scalable, customizable GenAI solution built on our proven HPM-ERSUA (single CPU) or HPM-ERSDE/HPM-GNRDE (dual CPU) hardware platforms and integrated with DeepMentor’s FineTune Expert (Trial). The solution is designed for long-term deployment, with a product lifecycle of 7+ years and full system control.

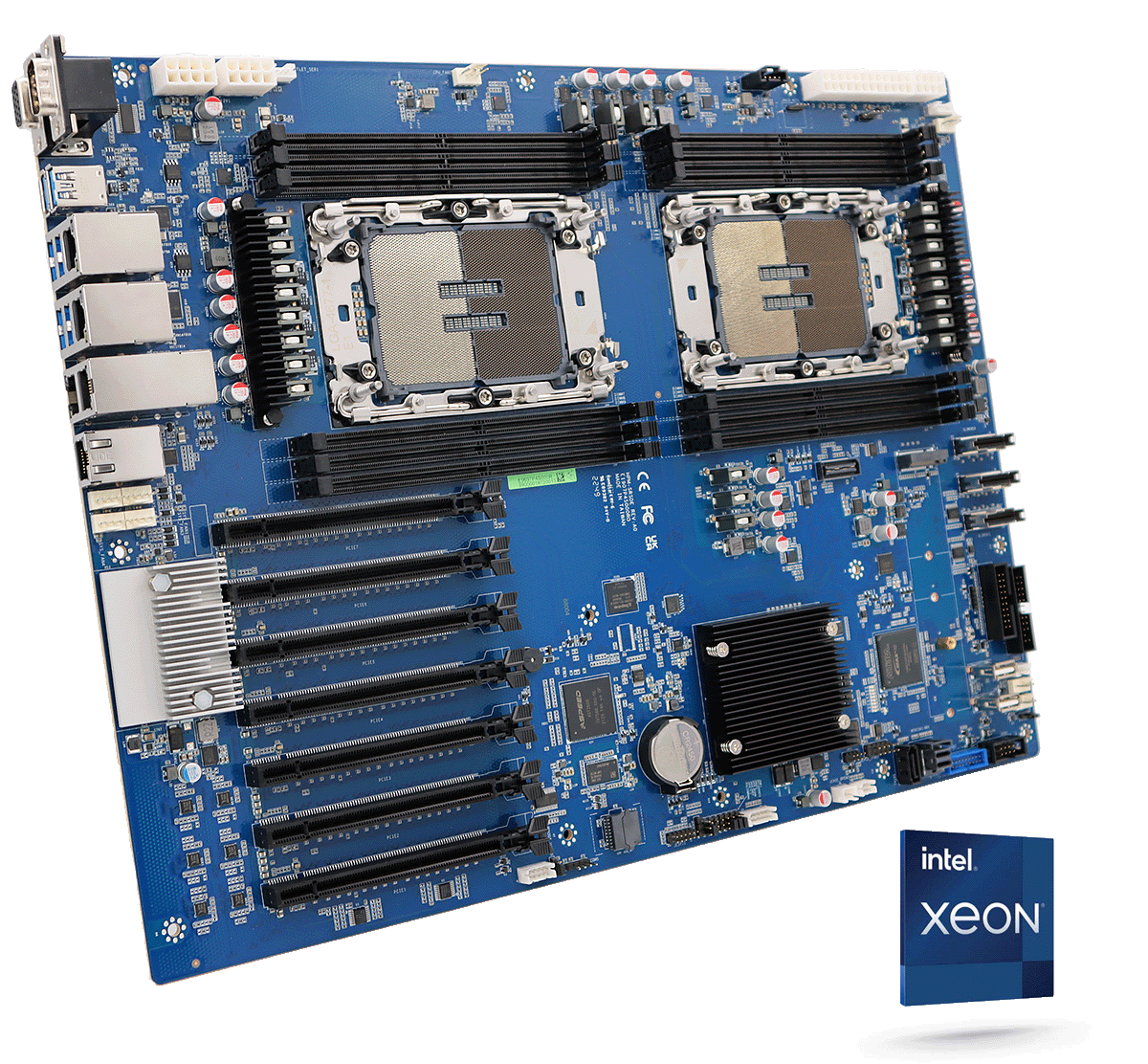

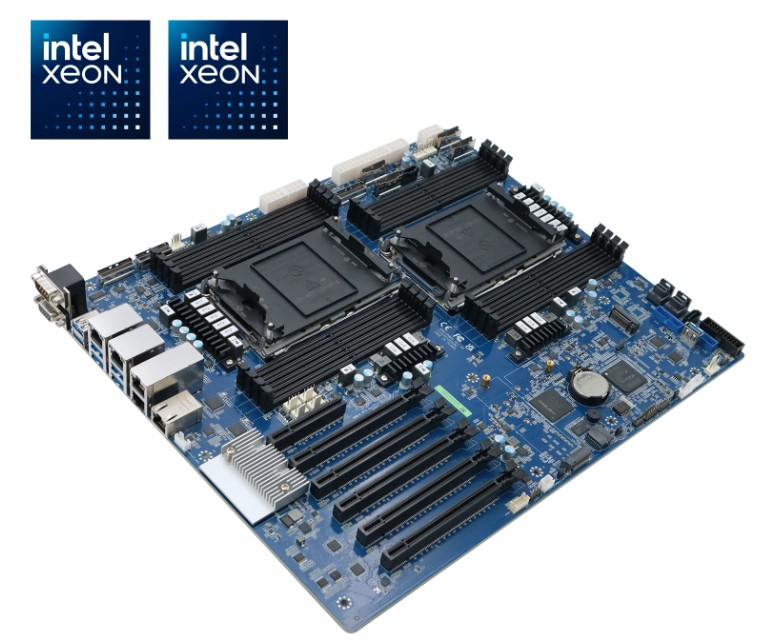

Hardware Platform:

- HPM-ERSUA (single CPU) or HPM-ERSDE/HPM-GNRDE (dual CPU) high-performance server motherboard

- Support for high-performance Intel® Xeon® CPUs up to 350W TDP

- 6, 12, or 16 DDR5 DIMM slots supporting RDIMM/MRDIMM, up to 4TB

- PCIe Gen5 x16, x8, and x4 support for multiple double-wide GPU cards

- M.2, SATA RAID, SlimSAS, and MCIO for a range of storage options

- 4 LAN ports (2.5GbE and 10GbE)

- IPMI Remote Management

- Long-term support: 7+ years

- Sturdy 1U/2U/3U/4U/custom rackmount chassis or desktop-type server station


Use Case Examples:

🧬 Biotech / Lab / R&D:

Lab notebook processing; Data interpretation; Protocol drafting; Multilingual reports; Domain-specific Q&A

🔹 Example: Pharmaceutical research teams have implemented internal LLMs trained on scientific papers to accelerate preclinical literature reviews and draft experimental protocols. Some biotech startups use private GPT-based models for lab notebook analysis to assist in experimental design.

🏭 Manufacturing / Industrial:

Maintenance Q&A bot; SOP summarization; Technician training generator; Edge AI for factory floor inference; Visual inspection assistance

🔹 Example: Factories using Intel-based edge devices deploy models locally to detect production issues, summarize maintenance logs, or guide technicians through troubleshooting steps. These are often offline-capable, particularly in environments with strict data isolation policies.

🖥️ Software / Tech Startups:

LLM fine-tuning for proprietary tools; Support ticket summarization; Dev documentation Q&A; Language model integration testing; Offline inference deployment

🎓 Education / Training:

AI-assisted course materials; Quiz/question generation; Document simplification; Chat tutor system; Content translation

For more product information, please contact your BCM Regional Sales Manager or email us at BCMSales@bcmcom.com. For custom designs, please visit our OEM/ODM Design Services.